Direct chat with LLM from address bar in Firefox

April 29, 2025

It's no secret that LLMs have largely replaced search engines in the last few years. However, using them as quickly as a search engine has remained a bit cumbersome for me. My workflow has been as follows:

- if I'm not in the browser, switch to it with the Alt + Tab shortcut

- open a new tab with Ctrl + T

- navigate to the LLM website of choice: chatgpt.com from its launch until Claude 3.5 Sonnet came out, then claude.ai until Gemini 2.5 Pro, and gemini.google.com since then

- write and send the query

Not a terrible workflow, but it's one step longer than the traditional search workflow, which skips step 3. Moreover, when the internet connection is slow, waiting for the LLM website to load can take a few awkward seconds. This is quite annoying, since I may have to wait twice: once for the website to load and again for the LLM to answer. In the traditional search workflow, I wait only once (okay, maybe more if I open any of the results, but at least I get some response after a single wait).

Luckily, this workflow can be optimised.

URL to chat

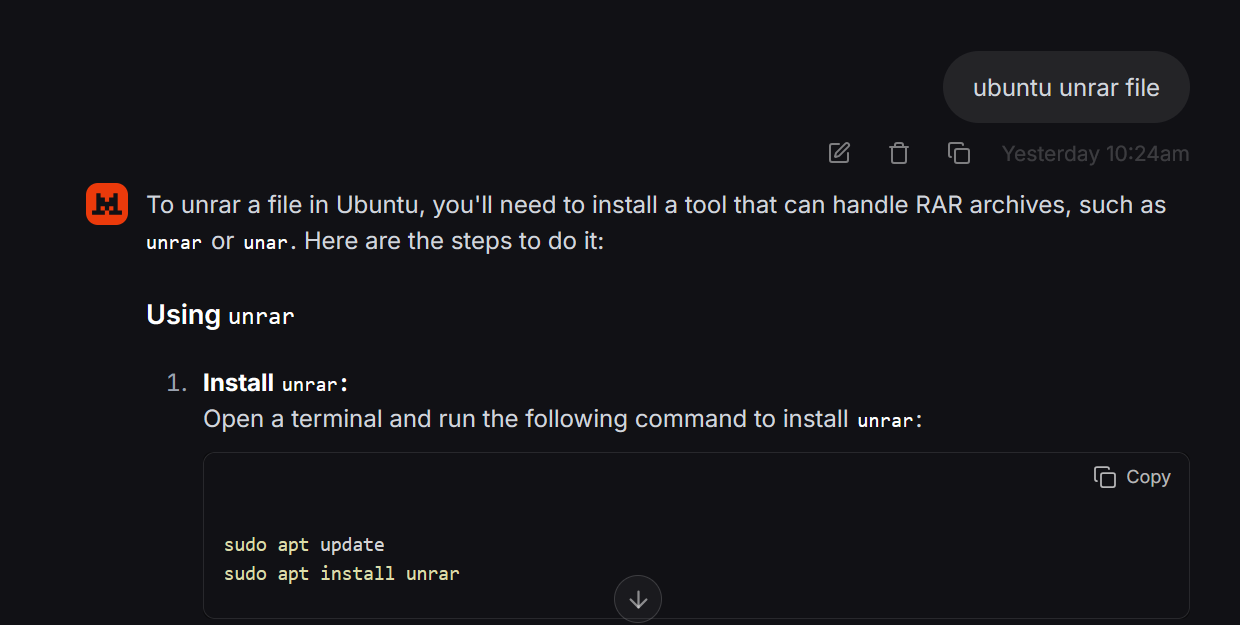

Some LLM providers support starting a chat directly from a URL. For example, visiting https://chat.mistral.ai/chat?q=ubuntu unrar file opens Mistral's Le Chat and starts a chat with the user message "ubuntu unrar file". The model starts responding immediately, so there's no need to press Enter.

This is also supported by ChatGPT (https://chatgpt.com/?q=ubuntu unrar file) and Claude (https://claude.ai/new?q=ubuntu unrar file). However, Claude only pre-fills the chat, so the message has to be sent manually. I tried to find similar functionality in Gemini, but without success.
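To make the URL format concrete, here is a minimal Python sketch that builds these chat URLs. It assumes that each provider reads the first message from the q query parameter, as in the examples above. CHAT_ENDPOINTS and chat_url are hypothetical helpers for illustration. The message is percent-encoded so that spaces and characters like & survive the trip.

```python
from urllib.parse import quote, urlencode

# Chat endpoints from the post; each is assumed to read the prompt from "q".
# Per the post, Claude only pre-fills the message instead of sending it.
CHAT_ENDPOINTS = {
    "mistral": "https://chat.mistral.ai/chat",
    "chatgpt": "https://chatgpt.com/",
    "claude": "https://claude.ai/new",
}


def chat_url(provider: str, message: str) -> str:
    """Build a URL that opens a new chat with `message` as the first prompt."""
    base = CHAT_ENDPOINTS[provider]
    # quote_via=quote encodes spaces as %20 instead of "+".
    return base + "?" + urlencode({"q": message}, quote_via=quote)


for provider in CHAT_ENDPOINTS:
    print(chat_url(provider, "ubuntu unrar file"))
```

Each printed URL should behave like the hand-written examples above, with Claude only pre-filling the message.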

Since Firefox supports adding custom search engines, we can use this to access the LLMs directly from the address bar!

Custom search engine

To add a custom search engine, we need to follow these steps (source):

- go to

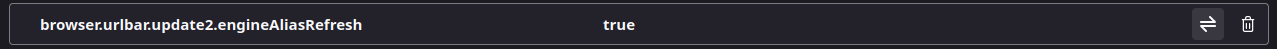

about:configand set propertybrowser.urlbar.update2.engineAliasRefreshtotrue

- in

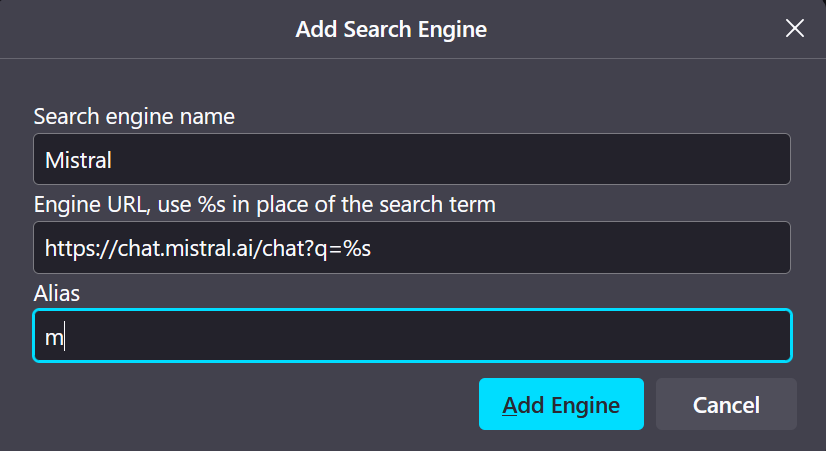

about:preferences#searchthere appears a new Add button - we can use it to add a new search engine - to add e.g. Mistral as a search engine, we use url

https://chat.mistral.ai/chat?q=%s- here%srepresents the search term
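The %s placeholder is plain string substitution. The Python sketch below models it. expand_search_template is a hypothetical helper, and it only approximates what the browser does, since Firefox's own encoding of the search terms may differ in edge cases.

```python
from urllib.parse import quote


def expand_search_template(template: str, terms: str) -> str:
    """Replace every %s in a search URL template with percent-encoded terms.

    A rough model of a custom search engine URL, not Firefox's actual code.
    """
    if "%s" not in template:
        raise ValueError("search URL template must contain %s")
    return template.replace("%s", quote(terms, safe=""))


print(expand_search_template("https://chat.mistral.ai/chat?q=%s", "ubuntu unrar file"))
```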

As the alias, I like to use a single letter, in this case m. The more common convention is an alias like @m or !m (the ! prefix comes from DuckDuckGo bangs). However, typing a special character slows me down when I'm in the flow. I use the same convention for search engines too: typing g ubuntu unrar file searches for ubuntu unrar file on Google instead of my default search engine. The caveat is that a query whose first word is a single letter matching an alias loses that letter and is sent to a different engine than intended. Still, I think this has happened to me only a handful of times in the years I've been using the one-letter alias convention.
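A simplified Python model of the one-letter alias convention, including its caveat, might look like the sketch below. It is not Firefox's actual address-bar logic. The alias table, the Google and DuckDuckGo URL templates and the route function are assumptions for illustration; the post doesn't say which default engine it uses.

```python
from urllib.parse import quote

# Hypothetical alias table following the one-letter convention from the post.
ALIASES = {
    "m": "https://chat.mistral.ai/chat?q=%s",
    "g": "https://www.google.com/search?q=%s",
}
# Hypothetical default engine; the post does not name the one it uses.
DEFAULT_TEMPLATE = "https://duckduckgo.com/?q=%s"


def route(text: str) -> str:
    """Return the URL a one-letter-alias setup would open for `text`.

    If the first word is a known alias followed by more text, the rest goes
    to that engine; otherwise the whole text goes to the default engine.
    """
    first, _, rest = text.strip().partition(" ")
    rest = rest.strip()
    if first in ALIASES and rest:
        template, terms = ALIASES[first], rest
    else:
        template, terms = DEFAULT_TEMPLATE, text.strip()
    return template.replace("%s", quote(terms, safe=""))


print(route("m ubuntu unrar file"))  # sent to Le Chat
print(route("ubuntu unrar file"))    # sent to the default engine
print(route("g force calculation"))  # the caveat: "g" is consumed as an alias
```

The last call shows the trade-off described above: a query that merely starts with "g " loses its first word and goes to Google.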

Now, when we type m ubuntu unrar file in the address bar, we are taken directly to a new chat and immediately receive a response.

I chose Mistral as the example because its Flash Answers are perfect for simple queries whose answer I know is short but which I'm too lazy to work out or simply don't know. Le Chat also seems to work without logging in.

This setup also works for at least ChatGPT (URL template https://chatgpt.com/?q=%s) and Claude (URL template https://claude.ai/new?q=%s). The one-letter alias convention is a bit problematic here. Both names start with c, and queries about the C programming language may also get captured by the alias if one isn't careful. Since I don't work with C, I'm happy to use c as the alias for ChatGPT. The C programmers among us will have to think of something else.
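The alias clash can be checked mechanically. This hypothetical Python sketch does two things. It groups engine names by their first letter to find shared aliases, and it tests whether a query's first word would be read as an alias. first_letter_collisions and is_captured are illustrative names, not part of any browser API.

```python
from collections import defaultdict


def first_letter_collisions(engine_names: list[str]) -> dict[str, list[str]]:
    """Group engines by lowercase first letter; keep letters shared by 2+."""
    groups: dict[str, list[str]] = defaultdict(list)
    for name in engine_names:
        groups[name[0].lower()].append(name)
    return {letter: names for letter, names in groups.items() if len(names) > 1}


def is_captured(query: str, aliases: set[str]) -> bool:
    """True if the query's first word would be consumed as an engine alias."""
    first, _, rest = query.strip().partition(" ")
    return first in aliases and bool(rest.strip())


print(first_letter_collisions(["Mistral", "ChatGPT", "Claude", "Google"]))
# {'c': ['ChatGPT', 'Claude']}
print(is_captured("c pointer arithmetic", {"m", "c", "g"}))  # True
```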

Finally, there is probably a simpler option than all of this: the built-in shortcuts in Claude Desktop or ChatGPT Desktop. But I like switching easily between search engines and a quick LLM chat, so I prefer the address bar approach. In theory, the brief moment between realising I need to search and typing the first letter in the address bar gives my mind enough time to decide which option will get me to the result fastest.